Photo by İsmail Enes Ayhan on Unsplash

THE NEED TO PROCESS DATA

Internet usage is growing. Every new user generates new data, and technology companies are always eager to process and understand new consumer behavior. Doing so requires continuous research and development of both software and hardware.

Software development has advanced in the last two decades. It has kept pace with the need to understand and process data thanks to the development of software libraries and frameworks. The large amount of data generated since 2010 has helped Deep Learning (DL), Machine Learning (ML), and Artificial Intelligence (AI) frameworks train networks, which now allows new data to be processed faster and more accurately.

Hardware is vital in ensuring that the processing of data using training and prediction frameworks occurs in the shortest time possible. It requires a massive amount of computing. The majority of the technology companies now rely on massive data centers equipped with advanced computer architectures.

BIG-5 – Facebook, Amazon, Apple, Microsoft, Google – FAAMG

Fully utilizing a computer architecture requires an in-depth, architecture-level understanding of it. That is not always possible, as data centers still run general-purpose computer architectures that do not cater to the different types of data the big technology companies have to process.

The disconnect between the software, the hardware, and the data has promoted the need to move from General-Purpose SoCs to Application-Specific SoCs. Not all data companies are capable of setting up a dedicated team that can focus on in-house silicon development to come up with an Application-Specific SoC.

To overcome the reliance on semiconductor companies, the BIG-5 (FAAMG) technology companies have started developing (or have already developed) in-house SoCs, with the hope of opening up the silicon to different data companies around the world.

THE PUSH FOR IN-HOUSE SILICON

Two major factors drive the push to develop new computer architectures (silicon):

- Memory

- Parallel computing

Memory:

- Modern applications are becoming memory intensive and also demand faster computation. To process requests from memory-intensive applications in the shortest possible time, the data needs to reside closer to the processing unit.

- The time to bring the data from SSD to DRAM to cache adds cycles and delays processing of the data. To overcome this bottleneck, semiconductor companies have implemented the following three techniques:

  - Cache Prefetching: Bring the data near the processing unit in advance to minimize cycle time.

  - Increasing Levels Of Cache: Add small (KB/MB) Level-1 (L1), Level-2 (L2, shared), and Level-3 (L3, shared) caches to improve memory prefetching speed.

  - Enable High Bandwidth Memory: Add an extra layer of large, high-speed memory between the Last Level Cache (LLC, either L2 or L3) and DRAM to speed up prefetching.

- All three of the above techniques improved the response time of processing units. However, as application data started growing, cache and memory thrashing became a new hurdle.

- Multiple processing units sharing the same level of memory started evicting each other’s data in the race to process requests faster. On top of all this, the lack of interconnect bandwidth added further bottlenecks.
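
The thrashing described in the bullets above can be illustrated with a toy simulation. This is a minimal sketch, not a model of any real SoC: it assumes a fully associative cache with least-recently-used (LRU) replacement, and the names `hit_rate`, `cyclic`, and `interleaved`, along with the cache size of 8 lines, are hypothetical choices made purely for illustration.

```python
from collections import OrderedDict


def hit_rate(accesses, capacity):
    """Fraction of accesses served by a fully associative LRU cache."""
    cache = OrderedDict()
    hits = 0
    for addr in accesses:
        if addr in cache:
            hits += 1
            cache.move_to_end(addr)        # mark as most recently used
        else:
            if len(cache) >= capacity:
                cache.popitem(last=False)  # evict the least recently used line
            cache[addr] = True
    return hits / len(accesses)


def cyclic(unit, working_set, passes):
    """One processing unit looping over its own small working set."""
    return [(unit, line) for _ in range(passes) for line in range(working_set)]


def interleaved(stream_a, stream_b):
    """Two units issuing requests alternately to the same shared cache."""
    return [addr for pair in zip(stream_a, stream_b) for addr in pair]


CACHE_LINES = 8  # hypothetical shared-cache size, in lines
unit_a = cyclic("A", 5, 100)
unit_b = cyclic("B", 5, 100)

alone = hit_rate(unit_a, CACHE_LINES)
shared = hit_rate(interleaved(unit_a, unit_b), CACHE_LINES)
print(f"unit A alone: {alone:.2f}, A and B sharing: {shared:.2f}")
```

Each unit's five-line working set fits in the cache on its own, so almost every access is a hit. Interleaved, the two units need ten lines from an eight-line cache, and under LRU every access evicts a line that is about to be reused, so the hit rate in this sketch collapses to zero.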

Parallel Computing:

- Apart from being memory intensive, applications have become compute-intensive too. This prompted the need to have multiple processing units within the same SoC. Running multiple data requests on a single processing unit or on two separate processing units provided a way to accomplish the task in the shortest possible time.

- The processing units still relied on the low-level memories to bring in the data to be processed quickly. This calls for new SoC design techniques that allow cache and high bandwidth memories to be shared in elegant ways without adding latency.

- Adding more processing units to a single SoC is not the solution. On top of that, developers have to keep coming up with smart ways to distribute the data to multiple SoCs to speed up the processing.

- Distributed computing is what the majority of the technology companies have adopted to ensure the data is processed quickly. It means a massive number of servers with thousands of SoCs and a large amount of memory. Over time this has increased the cost of operating data centers.
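
One common way to reason about why simply adding processing units gives diminishing returns is Amdahl's law, which the article itself does not name. The sketch below is illustrative only: the function name `amdahl_speedup` and the assumption that 90% of the work can run in parallel are hypothetical, not figures from any real workload.

```python
def amdahl_speedup(parallel_fraction, units):
    """Ideal speedup when only part of a task can be spread across units."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if units < 1:
        raise ValueError("units must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part runs at the same speed no matter how many units exist.
    return 1.0 / (serial_fraction + parallel_fraction / units)


PARALLEL_FRACTION = 0.9  # assumed share of the work that can be parallelized

for units in (1, 2, 8, 64, 1024):
    print(units, round(amdahl_speedup(PARALLEL_FRACTION, units), 2))
```

Under this assumption the speedup can never reach 10x, however many units are added, because the remaining 10% of serial work dominates. The formula also ignores memory and interconnect contention, which would lower the real figure further.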

Even though semiconductor companies have, over the last decade, come up with unique computer architectures to cater to both memory-intensive and compute-intensive applications, it has not been enough to adapt to the changing processing requirements of BIG-5.

The need to handle the memory and parallel computing demands of modern workloads and applications efficiently at the architecture level has pushed BIG-5 to go for in-house silicon.

THE STATUS OF IN-HOUSE SILICON

BIG-5 has been gearing towards the development of adaptive computer architecture for data and operating systems.

Facebook:

Facebook started working on in-house silicon a couple of years back. With a growing user base across multiple platforms (Instagram, WhatsApp, Messenger), Facebook ramped up its silicon effort last year.

They have a silicon team focusing on Application-Specific SoC development that caters not only to data centers but also to portable devices like Oculus.

Amazon:

Amazon Web Services (AWS) is one of the leaders in cloud solutions. Customized SoCs are vital to ensure that consumers and enterprises can make the most of the wide range of computing services AWS provides.

Apart from AWS, Amazon’s growing range of Echo products is also pushing it to drive in-house silicon development. Amazon is betting big on ARM architecture to drive its silicon needs.

Apple:

Apple has always been into silicon development. This year, with the Apple M1 launch, they are making big bets on in-house silicon development that caters well to their needs.

With Siri about to become the default search option on all the Apple devices, the need to have data-centric customized silicon will grow.

Microsoft:

Microsoft has always had a keen interest in hardware. They already have a strong team of researchers focusing on hardware research. The Surface line of products has shown strong growth, and the SQ1 line of SoCs establishes Microsoft’s goal of making Windows smoother to use on silicon.

Recently, Microsoft also announced a plan to develop a Secure Chip with the help of semiconductor giants Intel and AMD.

Google:

Like Microsoft, Google also has a dedicated team that has heavily contributed to silicon development via different computer architecture domains. They have already announced plans to develop in-house silicon for Pixel and Chromebook devices.

A few years ago, Google showcased to the world its Tensor Processing Units (TPUs), built to speed up training on data sets using the TensorFlow framework. Google’s latest data shows they have been successful in doing so.

THE POSSIBLE FUTURE SCENARIOS

BIG-5 is betting big on in-house silicon development. This requires not only years of planning and investment but also a dedicated semiconductor development team and flow chain. Going forward, there are two possible scenarios for BIG-5:

Scenario 1:

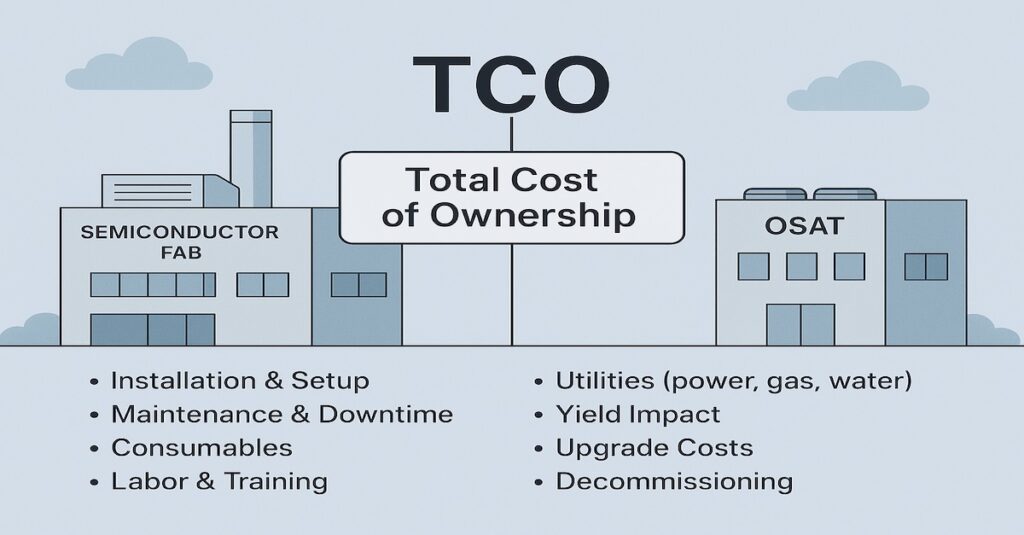

BIG-5 will keep collaborating with semiconductor companies (Intel, ARM, AMD, and Qualcomm) to design silicon for their products and data centers with strict control over features and the manufacturing process. It will enable BIG-5 to enter the in-house FAB-LESS business model.

Scenario 2:

BIG-5 will slowly move away from semiconductor companies and spin off an in-house team with a full turnkey silicon development chain. It will be more like an IDM business model and might require the acquisition of existing semiconductor manufacturing units.

The second scenario is unlikely to occur soon. In a decade or so, BIG-5 may go big on the semiconductor business and try to keep themselves as in-house FAB-LESS silicon developers (while owning a piece of IDMs/FABs), which will ultimately play into the hands of FAB/Pure-Play Foundries like TSMC and GLOBALFOUNDRIES.

Whichever scenario ends up occurring, there will be exciting developments in computer architectures that will drive the semiconductor industry to new levels.